Imagine this: it’s 10:02 AM. A customer initiates a routine transfer. At 10:03 AM, an AI agent quietly flags a subtle anomaly (a new device fingerprint plus a high‑risk beneficiary pattern) and intervenes before $50,000 leaves the account. No delays, no drama, just intelligent protection.

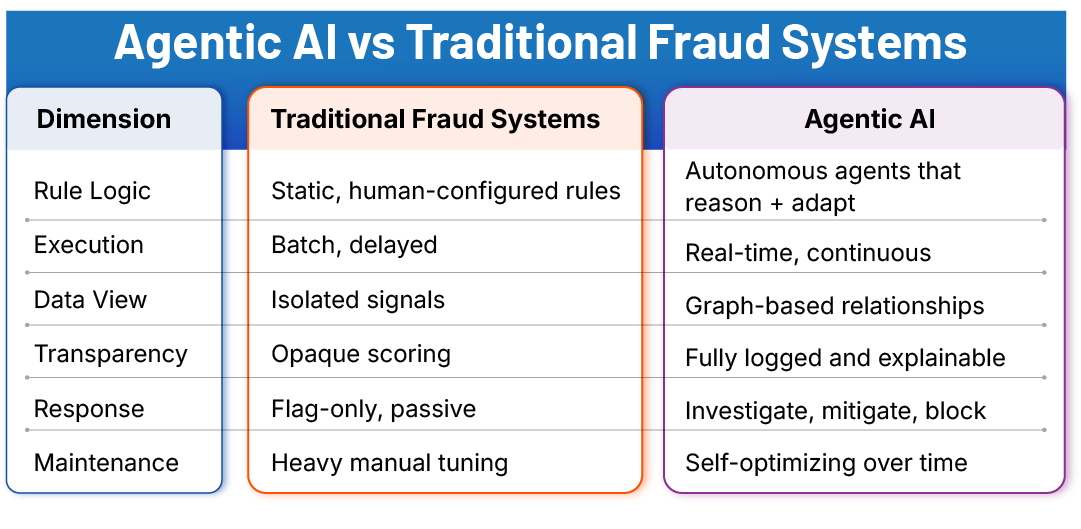

That’s Agentic AI for Fraud Detection: autonomous, goal‑driven agents working in real time to safeguard transactions, protect customers, and keep compliance airtight.

Why Agentic AI for Fraud Detection Matters Now

Fraud is cross‑channel, adaptive, and networked. Traditional rules engines struggle to keep up, and banks are accelerating AI adoption to detect anomalies, automate controls, and improve customer experience (see Gartner’s 2024 press release on its finance AI adoption survey for context and for use cases such as intelligent automation and anomaly detection). Regulatory pressure is rising too: since 7 October 2024, the UK Payment Systems Regulator (PSR) has mandated reimbursement for authorised push payment (APP) scams, which gives payment service providers (PSPs) a strong incentive to prevent scams proactively while adding minimal friction. The PSR publishes an overview for consumers and a quick guide for PSPs on the rules and timelines.

Agentic AI for Fraud Detection goes beyond static ML: it orchestrates multiple agents that adapt to new patterns, combines streaming telemetry with graph intelligence, and generates explainable actions, with the aim of reducing false positives while improving customer experience. For technical depth on graph approaches that expose mule networks and reduce false alarms, see NVIDIA’s blueprint for graph neural networks (GNNs) in financial fraud detection and an open‑access 2025 academic review of GNNs for financial fraud detection.
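To make the graph idea concrete, here is a minimal sketch of the fan-in/fan-out motif that graph approaches look for: an account paid by many distinct senders that in turn pays out to several distinct receivers. It is a plain counting heuristic, not a GNN, and it ignores timing and amounts; the thresholds (`min_in`, `min_out`) and account IDs are hypothetical.

```python
from collections import defaultdict


def mule_candidates(transfers, min_in=3, min_out=2):
    """Flag accounts showing a fan-in/fan-out motif.

    transfers: iterable of (sender, receiver) account pairs.
    Returns accounts paid by at least `min_in` distinct senders that also
    paid at least `min_out` distinct receivers, sorted by account ID.
    """
    senders = defaultdict(set)    # account -> distinct accounts that paid it
    receivers = defaultdict(set)  # account -> distinct accounts it paid
    for src, dst in transfers:
        receivers[src].add(dst)
        senders[dst].add(src)
    return sorted(
        acct for acct, payers in senders.items()
        if len(payers) >= min_in and len(receivers.get(acct, ())) >= min_out
    )


# Hypothetical transfers: three victims pay one account, which pays out to two others.
transfers = [
    ("victim_a", "mule_1"), ("victim_b", "mule_1"), ("victim_c", "mule_1"),
    ("mule_1", "cash_out_x"), ("mule_1", "cash_out_y"),
    ("alice", "bob"),
]
print(mule_candidates(transfers))  # ['mule_1']
```

In practice, a GNN learns richer versions of such patterns (multi-hop paths, shared devices, timing) instead of relying on fixed thresholds.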

A Step‑by‑Step Implementation Framework

1) Define Risk & Regulatory Context

Map AML/CFT obligations, APP reimbursement rules, privacy mandates, and AI governance requirements. Use NIST’s AI Risk Management Framework (AI RMF 1.0) to operationalize trustworthy AI through its four functions (Govern, Map, Measure, Manage), and consult the companion AI RMF Playbook for practical steps. Align model governance with the SR 11‑7 supervisory letter on model risk management and its AML extension, the interagency statement on model risk management for BSA/AML systems. For broader supervisory themes (explainability, data risk, resilience), see the Basel Committee’s digitalization guidance.

2) Prioritize High‑Impact Use Cases

Start with:

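- Real‑time transaction monitoring (streaming risk scores plus intelligent friction).

- APP scam interdiction (warnings, payment holds, and awareness of how the PSR splits reimbursement liability between sending and receiving PSPs).

- Graph‑based mule detection (fan‑in/fan‑out motifs; community detection with GNNs).

- Agent‑led AML investigations (retrieval‑augmented generation, or RAG, for triage, and drafting of suspicious activity reports, or SARs, with human approval).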
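The real-time monitoring and APP interdiction use cases can be sketched as a streaming feature feeding a graduated friction ladder. This is a minimal sketch under stated assumptions: the sliding-window velocity count, the hand-set weights in `risk_score`, and the allow/nudge/hold cut-offs are all hypothetical stand-ins for a trained model and a tuned policy.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class VelocityMonitor:
    """Counts each account's transfers inside a sliding time window."""
    window_seconds: float = 3600.0
    events: dict = field(default_factory=dict)  # account -> deque of timestamps

    def observe(self, account: str, ts: float) -> int:
        q = self.events.setdefault(account, deque())
        q.append(ts)
        # Drop timestamps that have fallen out of the window (ts - window, ts].
        while q and q[0] <= ts - self.window_seconds:
            q.popleft()
        return len(q)


def risk_score(velocity: int, new_device: bool, new_beneficiary: bool) -> float:
    # Hypothetical hand-set weights; a production system would use a trained model.
    score = 0.1 * min(velocity, 5)
    score += 0.3 if new_device else 0.0
    score += 0.3 if new_beneficiary else 0.0
    return min(score, 1.0)


def friction(score: float) -> str:
    """Map a risk score to graduated friction rather than a binary block."""
    if score < 0.4:
        return "allow"
    if score < 0.7:
        return "nudge"  # e.g. show a scam warning before the customer confirms
    return "hold"       # pause the payment for review or step-up authentication


monitor = VelocityMonitor(window_seconds=600)
for t in (0, 60, 120):  # three transfers within two minutes
    velocity = monitor.observe("acct_1", t)
print(velocity, friction(risk_score(velocity, new_device=True, new_beneficiary=True)))  # 3 hold
```

The middle "nudge" tier is what keeps friction intelligent: most customers see nothing, some see a warning, and only the riskiest payments are paused.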

3) Build Data Readiness

Unify transaction, device, and identity signals with data lineage and encryption. Enrich authentication with EMV 3‑D Secure (3DS) device data and risk‑based step‑ups that keep low‑risk transactions frictionless; see EMVCo’s overview of 3DS technical features and Mastercard’s Identity Check overview.
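As a rough illustration of both ideas, the sketch below merges per-source records into one feature view that remembers where each field came from (a simple form of lineage), then applies a risk-based step-up decision. The field names, source labels, and the 250.0 amount limit are hypothetical; this is not the EMV 3DS message format, only the kind of frictionless-versus-challenge logic an issuer’s risk engine might apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    value: object
    source: str  # lineage: the system that produced this value


def unify(txn: dict, device: dict, identity: dict) -> dict:
    """Merge per-source records into one feature view, tagging each field's origin."""
    merged = {}
    for source, record in (("payments", txn), ("device", device), ("identity", identity)):
        for key, value in record.items():
            if key in merged:  # refuse silent overwrites so lineage stays unambiguous
                raise ValueError(f"{key!r} supplied by {merged[key].source} and {source}")
            merged[key] = Signal(value, source)
    return merged


def authentication_path(features: dict, amount_limit: float = 250.0) -> str:
    """Risk-based step-up: challenge only when a risk signal fires (hypothetical rules)."""
    risky = (
        not features["device_seen_before"].value
        or features["amount"].value > amount_limit
        or features["identity_changed_recently"].value
    )
    return "challenge" if risky else "frictionless"


features = unify(
    {"amount": 40.0},
    {"device_seen_before": True},
    {"identity_changed_recently": False},
)
print(authentication_path(features), features["amount"].source)  # frictionless payments
```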

4) Architect Your Agents

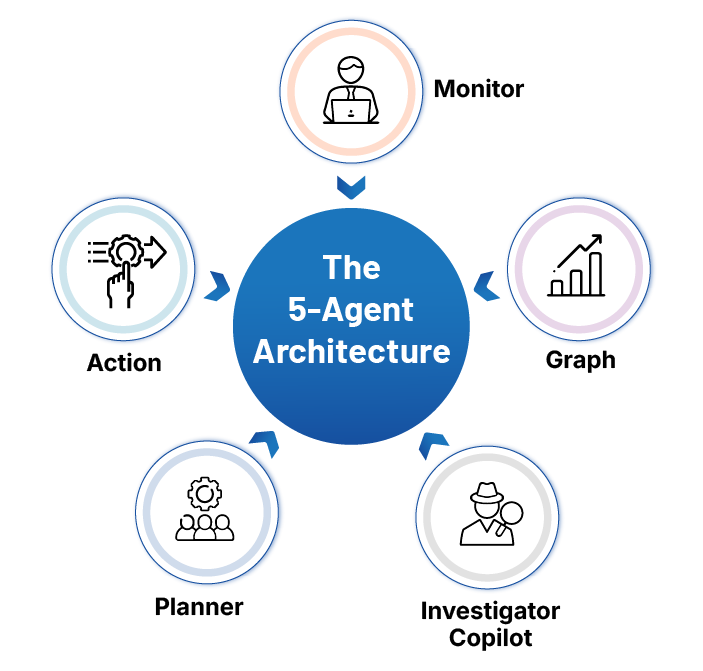

Orchestrate five cooperating agents:

- Planner – Sets objectives and composes policies aligned with the NIST AI RMF.

- Monitor – Ingests streams; scores risk; triggers compliant actions.

- Graph – Maintains the entity graph and runs GNNs to detect mule clusters (see the NVIDIA blueprint and the 2025 academic review cited above).

- Action – Executes holds, step‑up authentication (EMV 3DS), and beneficiary checks; logs its rationale.

- Investigator Copilot – Summarizes cases, links entities, and drafts SAR notes, keeping a human in the loop with review and validation practices consistent with SR 11‑7.
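The five roles can be wired together as a simple sequential pipeline. This is a minimal sketch, not a reference architecture: each agent is a plain Python class, the thresholds and weights are hypothetical, the Graph agent consults a precomputed set of suspected mule accounts rather than running a GNN, and no LLM is involved. In a real deployment the agents would more likely be separate services exchanging events.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Case:
    txn: dict
    score: float = 0.0
    action: str = "allow"
    rationale: list = field(default_factory=list)  # decision provenance for audit


class Planner:
    def policy(self) -> dict:
        # Objectives expressed as action thresholds (hypothetical values).
        return {"hold_at": 0.7, "step_up_at": 0.4}


class Monitor:
    def score(self, case: Case) -> None:
        # Stand-in for a streaming risk model: two binary signals, equal weights.
        case.score = 0.5 * case.txn["new_device"] + 0.5 * case.txn["new_beneficiary"]
        case.rationale.append(f"monitor score={case.score:.2f}")


class GraphAgent:
    def __init__(self, mule_accounts: set):
        self.mule_accounts = mule_accounts  # e.g. clusters flagged by a graph model

    def check(self, case: Case) -> None:
        if case.txn["beneficiary"] in self.mule_accounts:
            case.score = max(case.score, 0.9)
            case.rationale.append("beneficiary in suspected mule cluster")


class ActionAgent:
    def decide(self, case: Case, policy: dict) -> None:
        if case.score >= policy["hold_at"]:
            case.action = "hold"
        elif case.score >= policy["step_up_at"]:
            case.action = "step_up"
        case.rationale.append(f"action={case.action}")


class InvestigatorCopilot:
    def draft(self, case: Case) -> Optional[str]:
        if case.action != "hold":
            return None
        # Produces a draft only; an analyst reviews and approves before anything is filed.
        return "DRAFT (pending human approval): " + "; ".join(case.rationale)


def run(txn: dict, mule_accounts: set) -> Case:
    case = Case(txn)
    policy = Planner().policy()
    Monitor().score(case)
    GraphAgent(mule_accounts).check(case)
    ActionAgent().decide(case, policy)
    return case


case = run({"new_device": True, "new_beneficiary": False, "beneficiary": "acct_9"}, {"acct_9"})
print(case.action)  # hold
print(InvestigatorCopilot().draft(case))
```

Keeping the rationale on the case object means every decision carries its own provenance, which the Investigator Copilot can reuse when drafting notes.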

5) Embed Governance

Make explainability, bias audits, and cybersecurity hardening non‑negotiable. Use NIST AI RMF for measurement/drift control and Basel’s digitalization guidance for operational resilience. Maintain model cards, lineage, and validation artifacts per SR 11‑7.
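The NIST AI RMF does not prescribe a specific drift metric, so the sketch below swaps in one that is common in credit and fraud model monitoring: the population stability index (PSI), which compares a baseline score distribution with a current one over the same bins. The bins and figures are hypothetical. A widely used rule of thumb (an industry convention, not a regulatory standard) treats PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between a baseline and a current distribution over the same bins.

    expected, actual: per-bin proportions (each list sums to 1).
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical score distributions across three bins (low / medium / high risk).
baseline = [0.5, 0.3, 0.2]
this_month = [0.3, 0.3, 0.4]
psi = population_stability_index(baseline, this_month)
print(round(psi, 3))  # 0.241
```

A value like this would sit in the moderate-shift band and could trigger a review or revalidation under the model risk process.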

6) Human‑in‑the‑Loop

Operate semi‑autonomously, with a clear escalation matrix backed by playbooks, dual control for irreversible actions, and auditable decision provenance (see the interagency AML model risk statement).
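Dual control and decision provenance can be enforced in code as well as in policy. In this minimal sketch, an agent-proposed action records an audit trail, the proposer cannot approve its own action, and only actions in a hypothetical `IRREVERSIBLE` set need two distinct approvers before they may execute. The action names and the two-approver rule are assumptions for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical action names; only these require dual control in this sketch.
IRREVERSIBLE = {"close_account", "release_held_payment", "file_sar"}


@dataclass
class ActionRequest:
    action: str
    case_id: str
    proposed_by: str  # e.g. the Action agent
    approvals: set = field(default_factory=set)
    audit: list = field(default_factory=list)  # (UTC timestamp, actor, event)

    def __post_init__(self) -> None:
        self._log(self.proposed_by, f"proposed {self.action} for {self.case_id}")

    def _log(self, actor: str, event: str) -> None:
        self.audit.append((datetime.now(timezone.utc).isoformat(), actor, event))

    def approve(self, approver: str) -> None:
        if approver == self.proposed_by:
            raise PermissionError("the proposer cannot approve its own action")
        self.approvals.add(approver)
        self._log(approver, "approved")

    def can_execute(self) -> bool:
        # Irreversible actions need two distinct approvers other than the proposer;
        # reversible ones (such as a temporary hold) may run without prior approval.
        required = 2 if self.action in IRREVERSIBLE else 0
        return len(self.approvals) >= required


request = ActionRequest("file_sar", "case-42", proposed_by="action_agent")
request.approve("analyst_1")
print(request.can_execute())  # False
request.approve("analyst_2")
print(request.can_execute())  # True
```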

7) Pilot, Measure, Scale

Run a 90‑day pilot, measure KPIs (loss avoidance, false positives, SAR cycle time), and then scale across payment rails. Where collaboration is needed without sharing raw data, use privacy‑preserving federated learning to train models across institutions; see the IBM Research case study and an open‑access 2025 study of vertical federated learning for AML.
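The core of federated learning is that institutions share model parameters, not transactions. The sketch below shows only the server-side aggregation step of horizontal federated averaging (FedAvg), where each participant trains the same model on its own customers and the aggregator takes a sample-weighted average. It is a simplification: the vertical setting in the cited study (institutions holding different features about the same customers) needs a different protocol, and real deployments typically add protections such as secure aggregation. The weights and sample counts are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: average each parameter, weighted by local sample count.

    client_weights: one equal-length parameter list per institution.
    client_sizes: number of training examples each institution used locally.
    Only these parameters reach the aggregator; raw transactions stay in-house.
    """
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical one-round example: two banks with different amounts of labeled data.
bank_a = [0.2, 1.0]  # trained on 100 examples
bank_b = [0.6, 0.0]  # trained on 300 examples
print(federated_average([bank_a, bank_b], [100, 300]))  # [0.5, 0.25]
```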

Measuring ROI

- Loss Avoidance: Fewer fraud losses and fewer APP scam reimbursements under the PSR rules (see the PSR overview of rules and timeframes).

- Efficiency Gains: Fewer false positives and faster investigations, supported by graph intelligence and explainable ML (see the NVIDIA GNN article).

- Regulatory Alignment: Audit‑ready logs, explainability artifacts, and MRM documentation.

- Customer Experience: Intelligent friction via EMV 3DS, meaning more approvals and fewer unnecessary declines.
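A pilot scorecard for these measures can start very simply. This sketch assumes the institution already measures alert outcomes, avoided losses, running cost, and SAR cycle times; the function name, field names, and the example figures are hypothetical, and the ROI formula is a basic net-benefit-over-cost ratio rather than a prescribed methodology.

```python
from statistics import median


def pilot_kpis(alerts_total, alerts_confirmed_fraud, loss_avoided,
               run_cost, sar_cycle_days, efficiency_savings=0.0):
    """Summarize pilot KPIs from the institution's own measured counts and amounts."""
    precision = alerts_confirmed_fraud / alerts_total
    return {
        "precision": precision,
        # Share of alerts that were not fraud (1 - precision); fraud teams often
        # call this the "false positive rate", though it differs from the ML metric.
        "false_alert_share": 1 - precision,
        "median_sar_cycle_days": median(sar_cycle_days),
        # Simple ROI: benefits net of cost, relative to cost.
        "roi": (loss_avoided + efficiency_savings - run_cost) / run_cost,
    }


# Hypothetical pilot figures, for illustration only.
print(pilot_kpis(alerts_total=200, alerts_confirmed_fraud=50,
                 loss_avoided=400_000, run_cost=100_000,
                 sar_cycle_days=[3, 5, 8]))
```

Tracking the same scorecard before and during the pilot gives a like-for-like baseline for the loss-avoidance and efficiency claims.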

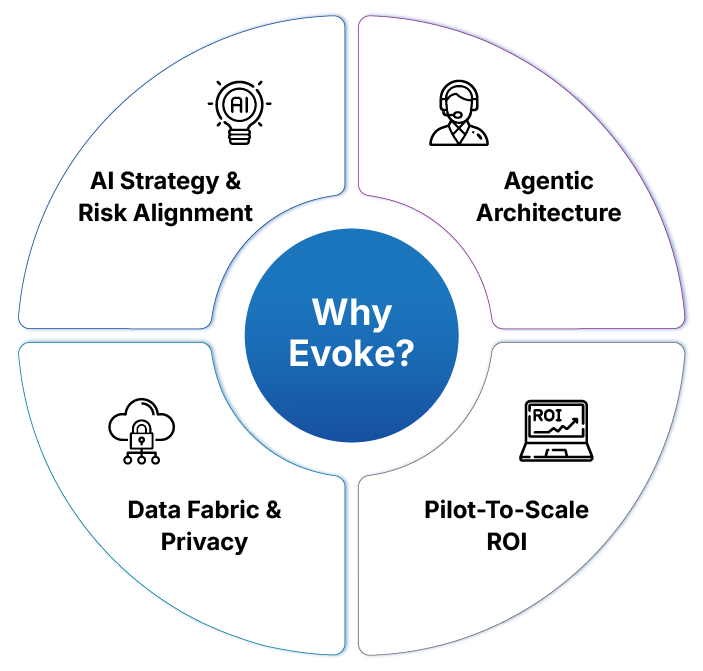

Why Evoke?

We help BFSI leaders design, deploy, and govern Agentic AI ecosystems that deliver measurable fraud reduction and compliance confidence:

- AI Strategy & Risk Alignment

- Agentic Architecture

- Data Fabric & Privacy

- Pilot‑to‑Scale ROI Dashboards

We align your Agentic AI fraud detection strategy with regulatory frameworks, unify data across transactions, devices, and identities, and deliver explainable, audit‑ready decisions. We help BFSI organizations cut losses, reduce false positives, and improve customer trust. Are you ready to future‑proof your fraud prevention strategy? Contact us now.